Open Shading Language for Blender is now also available on BlenderMarket, where you can read the description and download a sample chapter.

shader dtnoise(

point Pos = P, // texture position and scale

float Scale = 1,

int Np = 15, // number of ticks (impulse) per cell

int Seed = 42, // random seed

float Radius = 0.3, // distance to impulse that will add to result

float Size = 0.7, // length of an impulse

vector Dir = vector(1,0,0),// direction of anisotropy

float Theta = M_PI/2, // cone half-angle in radians; the default (M_PI/2, i.e. 90 degrees) means no anisotropy

float Step = 0.3, // cutoff value for Fac output

float Gain = 0.3, // brightness of the result

output float Sum = 0, // the sum of all the tick values

output float Fac = 0 // Sum remapped to 0..1 with smoothstep(0, Step, Sum)

){

point p = Pos * Scale;

point f = floor(p);

vector an= normalize(Dir);

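// helper axis that is never parallel to an (swapping components fails for e.g. Dir = (1,1,1))

vector r = abs(an[0]) < 0.9 ? vector(1,0,0) : vector(0,1,0);

vector uv = normalize(cross(an,r)); // first axis perpendicular to an

vector vv = cross(an,uv); // second perpendicular axis (the original cross(an,u) used the global u)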

int xx,yy,zz, np;

int nn[3];

for( xx=-1; xx<=1; xx++){

for( yy=-1; yy<=1; yy++){

for( zz=-1; zz<=1; zz++){

point ff = f + vector(xx,yy,zz);

int s=Seed;

nn[0] = int(ff[0]);

nn[1] = int(ff[1]);

nn[2] = int(ff[2]);

for( np=0; np < Np; np++){

vector pd1 = noise("cell",ff,s);

vector pd2 = randomcone(uv, vv, an, Theta, nn, s+1);

point p1 = ff + pd1;

point p2 = p1 + Size * pd2;

float d = distance(p1,p2,p);

Sum += (1 - smoothstep(0, Radius, d));

s+=3;

}

}

}

}

Sum *= Gain;

Fac = smoothstep(0, Step, Sum);

}

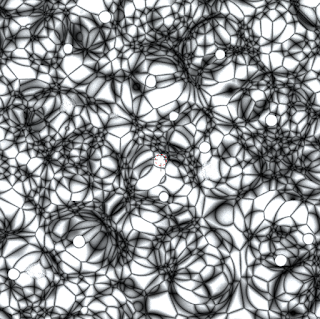

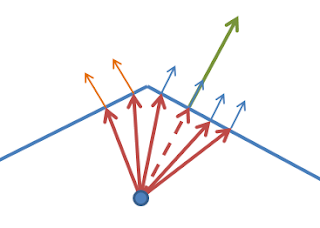

The essence is in the two statements that compute pd1 and pd2. Here we pick a random point and another point along a random direction, where this random direction is restricted to a cone whose narrowness we can control with the Theta parameter. Those two points are the start and end points of a line segment, and if the distance to this line segment is small enough we add a value. (Make sure that the sum of Size and Radius stays below 1 to prevent artifacts: only the neighbouring cells are checked, so a segment plus its radius of influence must not reach beyond them.)
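The core of a single tick can be sketched outside OSL. This is a minimal Python sketch, not the shader itself. `segment_distance()` computes what OSL's built-in three-argument `distance(p1, p2, q)` returns. `random_in_cone()` is a hypothetical stand-in for the `randomcone()` helper, whose code is not shown. It assumes `axis`, `u` and `v` form an orthonormal basis and samples cos(angle) uniformly, which spreads directions evenly over the cone.

```python
import math
import random


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def segment_distance(p1, p2, q):
    """Distance from q to the segment p1-p2 (like OSL's 3-argument distance())."""
    d = sub(p2, p1)
    dd = dot(d, d)
    # parameter of the projection of q onto the line, clamped to the segment
    t = 0.0 if dd == 0 else max(0.0, min(1.0, dot(sub(q, p1), d) / dd))
    closest = [p1[i] + t * d[i] for i in range(3)]
    diff = sub(q, closest)
    return math.sqrt(dot(diff, diff))


def smoothstep(edge0, edge1, x):
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3 - 2 * t)


def random_in_cone(axis, u, v, theta, rng):
    """Random unit direction within half-angle theta of axis (hypothetical randomcone)."""
    cos_t = 1 - rng.random() * (1 - math.cos(theta))  # between cos(theta) and 1
    sin_t = math.sqrt(max(0.0, 1 - cos_t * cos_t))
    phi = 2 * math.pi * rng.random()
    return [cos_t * axis[i] + sin_t * (math.cos(phi) * u[i] + math.sin(phi) * v[i])
            for i in range(3)]


def tick_value(p1, direction, size, radius, q):
    """One tick's contribution: 1 on the segment, falling to 0 at distance radius."""
    p2 = [p1[i] + size * direction[i] for i in range(3)]
    return 1 - smoothstep(0, radius, segment_distance(p1, p2, q))
```

Summing `tick_value()` over all ticks in the 3x3x3 neighbouring cells and multiplying by Gain corresponds to the Sum output.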

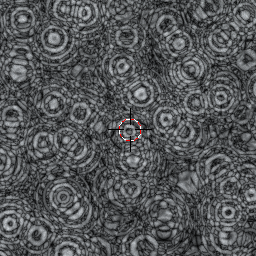

By varying E, the pattern can be made to look like anything from something resembling an x-ray diffraction pattern (E=28) to stylized models of atoms (E=2.6):

The code for this metric(*) is:

length(d) + (1 + sin(e * length(d)))/2;

i.e. we basically add a sine component to the distance. If E is zero, the sine term vanishes and this metric reduces to the plain distance plus a constant 0.5, which does not change which point is nearest. (*) Note that this isn't a true metric in the mathematical sense, as it does not satisfy the triangle inequality.
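A quick way to see why large E values change the pattern so much is to evaluate the expression directly. This small Python sketch, with the name `sine_metric` chosen for illustration, shows two things. With E = 0 it only adds 0.5. With E = 28 a point that is farther away can score lower than a nearer one, so the "nearest" feature point is no longer the geometrically closest one.

```python
import math


def sine_metric(d, e):
    """length(d) + (1 + sin(e * length(d))) / 2 for a 3D offset vector d."""
    r = math.sqrt(sum(c * c for c in d))
    return r + (1 + math.sin(e * r)) / 2


print(sine_metric([3, 4, 0], 0))                                  # plain distance + 0.5
print(sine_metric([0.10, 0, 0], 28) > sine_metric([0.13, 0, 0], 28))  # nearer point scores higher
```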

I submitted the patch for review just now; if you're interested you can follow its fate here.

All of this can be overcome by using Open Shading Language, but the Cycles OSL implementation is limited to the CPU and cannot benefit from a much faster GPU. I therefore decided to try and implement it in the Blender source code and that went rather well (but see my rants below):

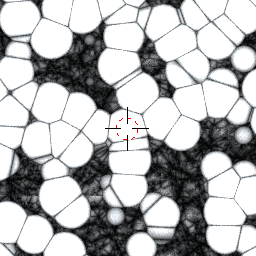

As you can see, I added a second value output to the Voronoi node that yields the distance to the next nearest neighbour, and added a dropdown to select the distance metric. Currently I have implemented the distance, distance squared (the default), Manhattan and Chebyshev metrics; I might add the generalized Minkowski metric as well. The images show the F2 - F1 noise for the distance squared and Manhattan metrics respectively:
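The effect of the metric on F2 - F1 can be illustrated with a small Python sketch. It is not the Cycles kernel code. For simplicity the feature points are passed in explicitly, whereas a real Voronoi texture uses one jittered point per cell in the neighbourhood. Note that with the example points below, the nearest point under the Euclidean metric is (1, 1, 0), but under the Manhattan metric it is (0, 1.5, 0).

```python
import math


def euclidean(dx, dy, dz):
    return math.sqrt(dx * dx + dy * dy + dz * dz)


def distance_squared(dx, dy, dz):
    return dx * dx + dy * dy + dz * dz


def manhattan(dx, dy, dz):
    return abs(dx) + abs(dy) + abs(dz)


def chebyshev(dx, dy, dz):
    return max(abs(dx), abs(dy), abs(dz))


def f2_minus_f1(p, feature_points, metric):
    """Second-nearest minus nearest feature distance under the given metric."""
    ds = sorted(metric(p[0] - f[0], p[1] - f[1], p[2] - f[2]) for f in feature_points)
    return ds[1] - ds[0]


pts = [(1, 1, 0), (0, 1.5, 0)]
for m in (euclidean, distance_squared, manhattan, chebyshev):
    print(m.__name__, f2_minus_f1((0, 0, 0), pts, m))
```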

The code works on GPU and CPU, but I only tested it on 64-bit Linux (Ubuntu 15.04 to be precise), although there is no reason to believe it will work differently on another OS.

I also changed device_cuda.cpp, which I needed to tweak because, although I have a GTX970 (= SM_52 capable) card, I didn't upgrade my CUDA drivers, so I had to hack the source to restrict the CUDA compiler to sm_50. This is irrelevant to the patch itself.

Some remarks about the code: coding this was not all pleasure, and that is an understatement. No fewer than 8 files needed to be changed to alter a single node type. That isn't a bad thing in itself, but there is duplicated code, a lot of redundant constants defined in different places, and I could find no decent documentation on the stack-based virtual machine (SVM) that is used by Cycles. It took me way longer to fathom the ins and outs of the code than it took to write the code itself, and I am still not 100% certain that I did not miss something. Blender is a wonderful piece of software, but it certainly would benefit from a set of decent architecture docs :-)

shader marble (color Cin = .5,

float freq = 1.0,

output color result = 0)

{

float sum = 0;

float freqVal = freq;

point Pshad = transform ("object", P);

for (int i = 0; i < 6; i++)

{

sum = sum + 1/freqVal * abs(.5 - noise( 4 * freqVal * Pshad)) ;

freqVal = 2 * freqVal;

}

result = Cin * sum;

}

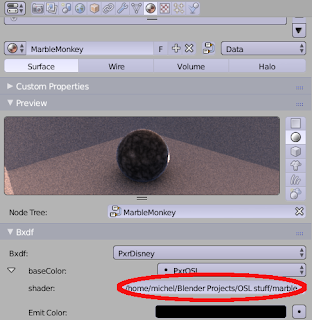

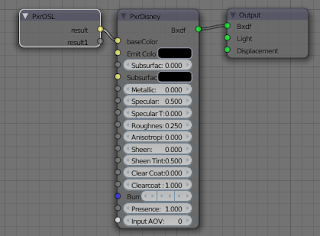

If you don't have the oslc compiler installed separately, the easiest way to compile a shader is to create a Cycles material first that contains a Script node. When you point this Script node to an external .osl file, it gets compiled immediately to an .oso file, and this .oso file can be used as is by Renderman.

The types and names of the input and output sockets are, it seems, defined in the file /opt/pixar/RenderManProServer-20.0/lib/RIS/pattern/Args/PxrOSL.args and are the same for all OSL shaders. So as far as I understand it, you can use different shaders, but they all should use the same names for their input and output sockets (I think this is in line with other Renderman patterns). For this example it meant that I had to rename the output color from Cout to result to get it working (otherwise you get a render-time error complaining that it cannot link to the result socket). Maybe I am looking at it from the wrong perspective, as I know next to nothing about Renderman. It is workable of course: just define enough suitable input and output parameters and use those predefined names in your shader, but it feels a bit restrictive. Anyway, it is a very promising step. I am studying the add-on to see if I can tweak the OSL node part and maybe help out the original author.

There still isn't a way to select the distance metric of the Voronoi texture node, but prompted by a question I decided to implement it in an ugly way; after all, if it works that is all that matters :-). All the necessary code to implement different distance metrics is already in node_texture.h, but for some reason it was commented out. I therefore lifted the necessary part from this file and combined it with a small shader that lets you choose the distance metric with an integer. An example for the Manhattan metric is shown below.

The shader also has an input E that is used as the exponent for the generalized Minkowski metric (metric == 6).
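The role of E can be shown with the textbook definition of the Minkowski distance. This is a short Python sketch, not the node_texture.h code. E = 1 gives the Manhattan metric and E = 2 the ordinary Euclidean distance. As E grows, the result approaches the Chebyshev metric. Values of E below 1 give star-like cell shapes, but then the result is no longer a true metric.

```python
def minkowski(dx, dy, dz, e):
    """Generalized Minkowski distance with exponent e (e > 0)."""
    return (abs(dx) ** e + abs(dy) ** e + abs(dz) ** e) ** (1.0 / e)


print(minkowski(3, 4, 0, 1), minkowski(3, 4, 0, 2), minkowski(3, 4, 0, 60))
```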

shader chaosmosaic(

point Pos=P,

string Image="",

int M=10,

output color Color=0

){

float x,y;

x=mod(Pos[0],1);

y=mod(Pos[1],1);

float xi=floor(x*M);

float yi=floor(y*M);

// mapping here

float xp=noise("cell",Pos,xi*M+yi);

float yp=noise("cell",Pos,xi*M+yi+M*M);

float xoffset = mod(mod(x,1.0/M)+xp,1);

float yoffset = mod(mod(y,1.0/M)+yp,1);

Color = texture(Image,xoffset,yoffset);

}

In the code above, M is the number of squares in each direction in our uv map. We use it to calculate two indices (xi and yi) that are subsequently used to pick a random position in the texture (xp, yp). The pixel from the texture is then selected according to the relative offset within the small square inside the uv map (xoffset, yoffset). The original algorithm also uses a blending function between adjacent tiles from the texture, but that will blur the image. We didn't implement that here, because from far enough away the small tile edges aren't noticeable while the large-scale repetition has gone away, so with very little code we already have a better result.
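The coordinate remapping can be sketched in Python to check its key property. All pixels inside one small tile receive the same random shift, so the texture stays intact within a tile while different tiles sample different parts of it. `cell_random()` is a hypothetical integer hash standing in for OSL's `noise("cell", ...)`. The actual cell-noise values will differ.

```python
import math


def cell_random(ix, iy, n):
    """Hypothetical stand-in for OSL's noise("cell", ...): deterministic value in [0, 1)."""
    h = ((ix * 73856093) ^ (iy * 19349663) ^ (n * 83492791)) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    return (h ^ (h >> 16)) / 2 ** 32


def chaos_mosaic_uv(u, v, m):
    """Texture coordinate to sample for uv coordinate (u, v) with m x m tiles."""
    x, y = u % 1.0, v % 1.0
    xi, yi = math.floor(x * m), math.floor(y * m)  # index of the small tile
    tile = xi * m + yi
    cu, cv = math.floor(u), math.floor(v)  # the shader passes Pos, so the unit cell matters too
    xp = cell_random(cu, cv, tile)  # random position in the texture for this tile
    yp = cell_random(cu, cv, tile + m * m)
    # offset within the small tile, shifted by the tile's random position
    return ((x % (1.0 / m) + xp) % 1.0, (y % (1.0 / m) + yp) % 1.0)


print(chaos_mosaic_uv(0.31, 0.52, 10), chaos_mosaic_uv(0.33, 0.55, 10))
```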

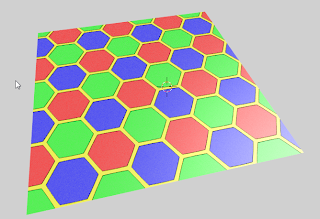

// distance to nearest edge

float x = mod(Coordinates[0]/3,1.0);

float y = mod(Coordinates[1]/3,A2);

#define N 18

vector hc[N] = {

vector( 0, -A2/3 ,0),

vector( 0, 0 ,0),

vector( 0, A2/3 ,0),

vector( 0,2*A2/3 ,0),

vector( 0, A2 ,0),

vector( 0,4*A2/3 ,0),

vector(0.5, -A2/3+A2/6,0),

vector(0.5, 0+A2/6,0),

vector(0.5, A2/3+A2/6,0),

vector(0.5,2*A2/3+A2/6,0),

vector(0.5, A2 +A2/6,0),

vector(0.5,4*A2/3+A2/6,0),

vector(1.0, -A2/3 ,0),

vector(1.0, 0 ,0),

vector(1.0, A2/3 ,0),

vector(1.0,2*A2/3 ,0),

vector(1.0, A2 ,0),

vector(1.0,4*A2/3 ,0)

};

float d[N], t;

for(int i=0; i < N; i++){

float dx = x - hc[i][0];

float dy = y - hc[i][1];

d[i] = hypot(dx, dy);

}

for(int j= N-1; j >= 0; j--){

for(int i= 0; i < j; i++){

if(d[i] > d[i+1]){

SWAP(t, d[i], d[i+1]);

}

}

}

Center = d[0];

Edge = d[1] - d[0];

InEdge = Edge < Width;

The approach we have taken is very simple: the hc array enumerates all nearby hexagon centers. We then calculate all the distances to these points and sort them from shortest to longest. Yes, this uses a bubble sort: with 18 elements a more efficient sorting algorithm might be faster, but at the cost of much more complex code, so I didn't bother. Edge is not really the distance to the closest edge but the difference between the distances to the closest center and the next closest one. Near an edge these two distances become more and more alike, so Edge approaches zero. For convenience we also provide a comparison with a threshold (InEdge).
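Only d[0] and d[1] are used after sorting, so a single pass that tracks the two smallest distances is enough. This avoids the sort altogether. Here is a Python sketch of that alternative. It is not the shader's method, and `center_and_edge` is an illustrative name. It returns the same values as Center and Edge for any list of centers.

```python
import math


def center_and_edge(x, y, centers):
    """Return (distance to nearest center, second-nearest minus nearest)."""
    d0 = d1 = math.inf
    for cx, cy in centers:
        d = math.hypot(x - cx, y - cy)
        if d < d0:
            d0, d1 = d, d0  # new nearest; old nearest becomes second
        elif d < d1:
            d1 = d
    return d0, d1 - d0


print(center_and_edge(0.25, 0.0, [(0, 0), (1, 0), (0, 3)]))
```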

shader edgedecay(

point Pos = P,

float Edge = 0.1,

float Power = 1,

float Density= 10,

float Length = 1 - Edge,

output float Fac=Density

){

float h = Pos[2];

if(h>Edge){

float d = (h - Edge)/Length;

if(Power > 0 && d < 1){

Fac = Density * ( 1 - pow(d,Power));

}else{

Fac = 0;

}

}

}

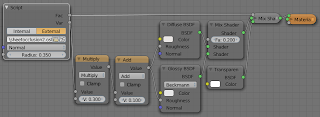

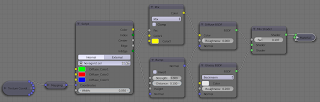

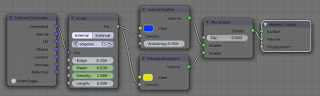

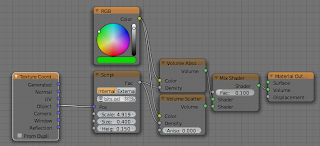

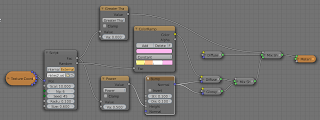

The node setup for the videoclip is:

Things are getting interesting now that more major players start supporting OSL. This week Pixar announced the support for OSL in Renderman and a few months ago Chaosgroup released a version of V-Ray with OSL integration.

Not all integrations are equal. For example, the one in Renderman doesn't appear to support closures (BSDFs). Nevertheless, there is now a huge potential to share procedural patterns and textures between V-Ray, Renderman and Blender. I am quite excited and can't wait to see how this will foster the reuse of shaders across platforms. To illustrate the portability: some of the shaders provided as examples on the V-Ray page work in Blender without a single change, while others need some tweaks. This is mainly because each renderer provides its own set of closures (for example, Blender does not have the phong() closure). More importantly, the output model is not identical: the V-Ray shaders don't provide closure outputs but simply assign to the Ci variable, something that has no effect in Blender.

If you would like to know more about programming OSL you might be interested in my book "Open Shading Language for Blender". More on the availability of this book and a sample can be found on this page.

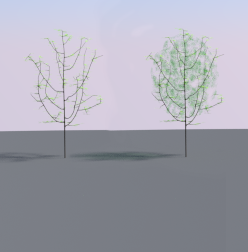

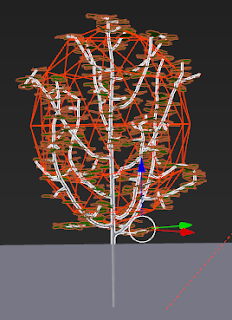

After finding the closest Voronoi point with the voronoi() function, we call the indisk function with the position of this closest point and a random direction. indisk checks whether we are inside a disk with its axis in that direction and returns 1 if this is indeed so. Note that the include at the start of the code refers to a file that is distributed with Blender and contains a number of useful functions, including a number of Voronoi/Worley related ones.

#include "node_texture.h"

int indisk(

point p,

point c, float r, float h, vector d

){

vector v = p - c;

float a = dot(v,d);

float lh = abs(length(a * d));

if(lh > h){ return 0;}

float lv = length(v);

float lp = sqrt(lv*lv - lh*lh);

if(lp > r){ return 0;}

return 1;

}

vector randomdirection(point p){

float t = M_2PI*noise("cell",p,1);

float u = 2*noise("cell",p,2)-1;

float s,c,a;

sincos(t,s,c);

a = sqrt(1-u*u);

float x = a*c;

float y = a*s;

float z = u;

return vector(x,y,z);

}

shader bits(

point Pos = P,

float Scale = 1,

float Size = 1,

float Height = 0.05,

output float Fac = 0

){

point p = Pos * Scale;

point centers[4];

float distances[4];

voronoi(p, "Distance Squared", 0, distances, centers);

if(indisk(p,centers[0],Size,Height,randomdirection(centers[0]))){ // one fixed random orientation per disk

Fac = 1;

}

}
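The two helpers can be mirrored in Python to check their behaviour. This is a sketch that assumes `d` is a unit vector, as `randomdirection()` guarantees. The `max(0.0, ...)` clamp is an addition not in the shader. It guards against rounding making the square-root argument slightly negative. `direction_from()` follows the same mapping as `randomdirection()`: a uniform height z in [-1, 1] and a uniform azimuth. That combination gives directions spread evenly over the sphere.

```python
import math


def in_disk(p, c, r, h, d):
    """1 if p is inside the disk of radius r and half-thickness h at c with unit axis d."""
    v = [p[i] - c[i] for i in range(3)]
    a = sum(v[i] * d[i] for i in range(3))  # signed distance along the axis
    if abs(a) > h:
        return 0
    lv2 = sum(x * x for x in v)
    lp = math.sqrt(max(0.0, lv2 - a * a))  # distance from the axis
    return 1 if lp <= r else 0


def direction_from(t01, u01):
    """Map two numbers in [0, 1) to a unit vector, as randomdirection() does."""
    t = 2 * math.pi * t01
    z = 2 * u01 - 1
    a = math.sqrt(1 - z * z)
    return (a * math.cos(t), a * math.sin(t), z)
```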

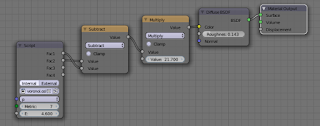

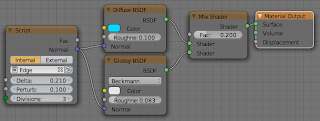

The node setup to use this shader as seen in the opening image of this article looks like this:

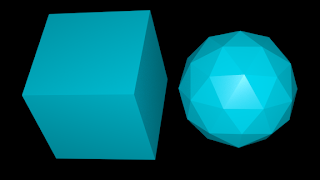

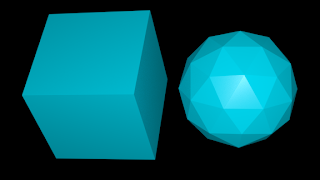

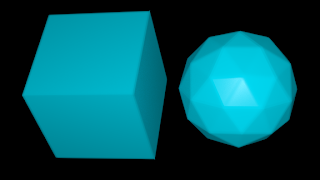

Perturb = 0.1. Subtle but clearly noticeable.

Perturb = 1.0 is far less subtle but shows how much roundedness is possible. All images were produced with Divisions = 3, i.e. 27 sample rays (see below).

#define BIG 1e6

shader edge(

float Delta = 0.01,

float Perturb = 0.001,

int Divisions = 2,

output float Fac = 0,

output normal Normal = N

){

vector origin = P-Delta*N;

float count = 1;

for(int x=0; x < Divisions; x++){

for(int y=0; y < Divisions; y++){

for(int z=0; z < Divisions; z++){

vector d = noise("perlin",vector(x+0.1,y+0.1,z+0.1));

vector np = N + Perturb * d;

if(trace(origin, np)){

float hdb = 0;

normal nb = 0;

getmessage("trace","hitdist",hdb);

// versions before 2.69.11 4-Mar-2014 crash on next statement

getmessage("trace","N",nb);

Normal -= nb;

count += 1.0;

}

}

}

}

Normal = normalize(Normal/count);

Fac = count/(1+Divisions * Divisions * Divisions);

}

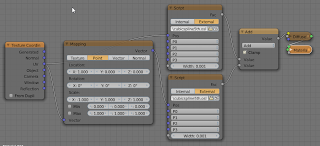

We choose an origin point a bit below the surface by descending along the normal (the origin assignment), generate perturbed normals (d and np), and trace along these perturbed normals with trace(). If we have a hit, we retrieve the normal at the hit point with getmessage("trace","N",nb). Because we are below the surface, inside the mesh, this normal will point inward, so we subtract it from our sum of normals (Normal). Finally we divide the sum of normals by the number of hits plus one (count starts at 1 for the original normal) and normalize it. Note that we also get the hit distance (hdb) but don't use that value. We could check it against some limit to reduce artifacts on concave meshes, for instance.

As the comment in the code notes, Blender versions before 2.69.11 (4 March 2014) crash on the second call to the getmessage() function. This appears to be fixed in the latest builds, but be aware. The shader uses the global P and N as the position and normal respectively, but of course this can be changed quite easily if necessary. The setup shown below is the exact setup used for the example images at the start of the page.
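The averaging step can be sketched in Python, assuming the hit normals have already been collected. As stated above, they point inward. This is a simplified illustration of the arithmetic, not a replacement for the tracing. It also shows that dividing by count before normalizing does not change the resulting direction. Only Fac depends on the count.

```python
import math


def rounded_normal(n, hit_normals, divisions):
    """Combine the shading normal with negated hit normals, as the edge shader does.

    Returns (normal, fac) with fac = count / (1 + divisions**3).
    """
    s = list(n)
    for nb in hit_normals:
        s = [s[i] - nb[i] for i in range(3)]  # inward-pointing, so subtract
    count = 1 + len(hit_normals)
    length = math.sqrt(sum(c * c for c in s))
    normal = [c / length for c in s]  # dividing by count first would not change this
    fac = count / (1 + divisions ** 3)
    return normal, fac


# a hit on an adjacent face whose inward normal is (-1, 0, 0) tilts the normal by 45 degrees
print(rounded_normal((0, 0, 1), [(-1, 0, 0)], 1))
```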

To find the point on the spline closest to the point P being shaded, we need those values of t that satisfy the relation dB(t)/dt · (B(t) - P) = 0. In plain English: those values of t where the tangent to the spline is perpendicular to the vector pointing to the point being shaded (remember that the dot product of two vectors is zero if they are perpendicular). In OSL, the point type and the built-in dot() function make for a lot less code.
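For a cubic Bezier curve, B'(t) is quadratic and B(t) - P is cubic, so their dot product is a polynomial of degree 5 in t. That is why the root finder below works on a fifth order curve. The condition itself is easy to check numerically. This Python sketch deliberately swaps in a simpler technique than the Bezier-subdivision root finder: it samples t, brackets sign changes, refines them with bisection, and then keeps the candidate, endpoints included, that is actually closest.

```python
def bezier(ctrl, t):
    """Point on a 2D cubic Bezier curve with control points ctrl[0..3]."""
    s = 1 - t
    w = (s ** 3, 3 * s * s * t, 3 * s * t * t, t ** 3)
    return tuple(sum(w[k] * ctrl[k][i] for k in range(4)) for i in range(2))


def bezier_tangent(ctrl, t):
    """dB(t)/dt for the same curve."""
    s = 1 - t
    w = (3 * s * s, 6 * s * t, 3 * t * t)
    diff = [(ctrl[k + 1][0] - ctrl[k][0], ctrl[k + 1][1] - ctrl[k][1]) for k in range(3)]
    return tuple(sum(w[k] * diff[k][i] for k in range(3)) for i in range(2))


def tangent_dot(ctrl, p, t):
    """dB(t)/dt . (B(t) - P): zero where the tangent is perpendicular to B(t) - P."""
    b, db = bezier(ctrl, t), bezier_tangent(ctrl, t)
    return db[0] * (b[0] - p[0]) + db[1] * (b[1] - p[1])


def closest_t(ctrl, p, samples=64, iterations=50):
    """Parameter of the point on the curve closest to p (sampling + bisection)."""
    candidates = [0.0, 1.0]  # the endpoints may be closest too
    prev_t, prev_f = 0.0, tangent_dot(ctrl, p, 0.0)
    for k in range(1, samples + 1):
        t = k / samples
        ft = tangent_dot(ctrl, p, t)
        if prev_f == 0:
            candidates.append(prev_t)
        elif prev_f * ft < 0:  # sign change: a root lies in [prev_t, t]
            lo, hi = prev_t, t
            for _ in range(iterations):
                mid = (lo + hi) / 2
                if tangent_dot(ctrl, p, lo) * tangent_dot(ctrl, p, mid) <= 0:
                    hi = mid
                else:
                    lo = mid
            candidates.append((lo + hi) / 2)
        prev_t, prev_f = t, ft

    def dist2(t):
        b = bezier(ctrl, t)
        return (b[0] - p[0]) ** 2 + (b[1] - p[1]) ** 2

    return min(candidates, key=dist2)
```

Sampling can miss two roots that fall between neighbouring samples. This is one reason the shader uses a root finder that counts crossings of the control polygon instead.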

The core of the code is FindRoots(), which is nontrivial and needed to be reimplemented in a non-recursive form, because OSL does not support recursion.

#define POP wtop+=6;wn--

int FindRoots(point w0[6], float t[5]){

point w[STACKSIZE];

int wtop=0;

int wend=0;

int wn=0;

for(int k=0;k<6;k++){ w[wend++]=w0[k]; } // push the original control points

wn++;

int nc=0;

while(wn>0){

//printf("[%d]",wn);

int cc=CrossingCount(w,wtop); // w[:5]

if(cc==0){

POP;

continue;

}

if(cc==1){

if(wn>=MAXDEPTH){

t[nc++]= (w[wtop][0]+w[wtop+5][0])/2;

//printf(" MAX ");

POP;

continue;

}

if(ControlPolygonFlatEnough(w,wtop)){ // w[:5]

t[nc++]= ComputeXIntercept(w,wtop);

//printf(" FLAT (%.1f | %.1f)",w[wtop],w[wtop+5]);

POP;

continue;

}

}

// not flat or more than one crossing

DeCasteljau(w,0.5,wtop,wend); //left=[wend:wend+5], right=[wend+6;wend+11]

for(int k=0;k<6;k++){ w[wtop+k]=w[wend+6+k]; } // right half replaces the processed set

wn++; wend+=6; // the left half at wend stays on the stack

}

return nc;

}
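The idea of replacing recursion with an explicit stack can be shown in a compact Python sketch. It is simplified compared with FindRoots. The curve is given by the Bernstein coefficients of a polynomial in one dimension, which are the y values of control points spaced evenly in x. Its termination test is the interval width rather than ControlPolygonFlatEnough() and ComputeXIntercept(). The stack entries carry their own interval, so no index bookkeeping with wtop and wend is needed. Only the crossing count and the de Casteljau split work the same way. `de_casteljau_split`, `crossing_count` and `find_roots` are illustrative names.

```python
def de_casteljau_split(b):
    """Split Bernstein coefficients b at t = 0.5 into left and right halves."""
    left, right = [b[0]], [b[-1]]
    level = list(b)
    while len(level) > 1:
        level = [(level[i] + level[i + 1]) / 2 for i in range(len(level) - 1)]
        left.append(level[0])
        right.append(level[-1])
    return left, right[::-1]


def crossing_count(b):
    """Sign changes of the control polygon: an upper bound on the number of roots."""
    return sum(1 for a, c in zip(b, b[1:]) if a * c < 0)


def find_roots(b, tol=1e-6):
    roots = []
    stack = [(list(b), 0.0, 1.0)]  # (coefficients, t0, t1) replaces recursive calls
    while stack:
        coeffs, t0, t1 = stack.pop()
        cc = crossing_count(coeffs)
        if cc == 0:
            continue  # no root in this piece: pop it
        if t1 - t0 < tol:
            roots.append((t0 + t1) / 2)  # small enough: record the root
            continue
        left, right = de_casteljau_split(coeffs)
        tm = (t0 + t1) / 2
        stack.append((right, tm, t1))
        stack.append((left, t0, tm))  # left is processed first, so roots come out sorted
    return roots


# (t - 0.3)(t - 0.7) has Bernstein coefficients [0.21, -0.29, 0.21]
print(find_roots([0.21, -0.29, 0.21]))
```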

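A fifth order Bezier curve has 6 control points and at most 5 roots. The control points are passed as the w0 argument and the t array will receive the root values. The function's return value is the number of roots found. Because we cannot use recursion, we manage our own stack of control point sets, and this is where the w array comes in. We first copy the original control points to w and increment wn to reflect that we now have one set on the stack. At this point wtop (the index of the first control point in the topmost set) is 0, and wend (the index of the first free slot in w) is 6. nc holds the number of roots found so far. If a set has no crossings, it is popped. If it has exactly one crossing and is flat enough (or wn has reached MAXDEPTH), we record the root and pop it. Otherwise we split the curve in two with DeCasteljau(), which stores the control points of the left and right halves starting at index wend in the w array. To save space, we copy the second set to the top of the stack (because the control points for the original curve are no longer needed), which means we only have to increase the stack size wn by one.

The snowflake shader below has a Seed input. Using the Object Info node, we can obtain a unique random number for each object to use for this purpose, as we will see when we examine the node setup. The actual pattern is generated by pattern(). Note that because we expect uv-coordinates for a simple square (i.e. in the range [0,1]), we transform these coordinates so that the center of the square, (0.5, 0.5), becomes the origin (see the call to hex() in the snowflake shader).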

#define SLOPE 1/sqrt(3)

#define SIDE sqrt(.75)

#define D60c cos(radians(60))

#define D60s sin(radians(60))

void hex(float x, float y, output float hx[6], output float hy[6]){

hx[0]=x;

hy[0]=y;

hx[1]=x*D60c-y*D60s;

hy[1]=y*D60c+x*D60s;

hx[2]=x*D60c+y*D60s;;

hy[2]=y*D60c-x*D60s;

hx[3]=-hx[0];

hy[3]=-hy[0];

hx[4]=-hx[1];

hy[4]=-hy[1];

hx[5]=-hx[2];

hy[5]=-hy[2];

}

int in_hexagon(

float px, float py,

float cx, float cy, float r,

output float d

){

d=hypot(px-cx,py-cy);

if(d>r){ return 0; }

float hx[6],hy[6];

hex(px-cx, py-cy, hx, hy);

for(int h=0; h < 6; h++){

if((abs(hy[h]) < SLOPE*hx[h]) && (hx[h]< r*SIDE)){

d=abs(hx[h]);

return 1;

}

}

return 0;

}

#define CELL noise("cell",seed++)

float pattern(float x, float y, int Seed, int Kernels){

int seed=Seed;

int n=(int)(1+Kernels*CELL);

float hx=0, maxx=0;

for(int f=0; f < n; f++){

float hy=0;

float r=0.2*CELL;

float d;

if(in_hexagon(x,y, hx,hy,r, d)){

return d;

}

hx=SIDE*CELL;

if(hx>maxx){maxx=hx;}

}

if(x < maxx && abs(y) < 0.01){ return 1; }

return 0; // neither inside a hexagon nor on the central spine

}

shader snowflake(

point Pos=P,

int Seed=0,

int Kernels=15,

output float Fac=0

){

float hx[6],hy[6];

hex(2*(Pos[0]-0.5), 2*(Pos[1]-0.5), hx, hy);

for(int h=0; h<6 && Fac==0; h++){

if(abs(hy[h]) < SLOPE*hx[h]){

Fac=pattern(hx[h],hy[h],Seed, Kernels);

}

}

}

The Fac output may vary smoothly, so we use a Color Ramp node with constant interpolation to create stepped values that we can use to drive an ice-like bump pattern. The Kernels input determines how many random hexagons are within each snowflake, so it essentially controls how dense the flakes look.
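The six-fold symmetry comes from hex(), which rotates a point by every multiple of 60 degrees. The snowflake shader then keeps the copy that falls within 30 degrees of the positive x-axis, so pattern() only ever has to handle one wedge. A Python sketch of that folding step follows. The function names are illustrative, and unlike the shader it computes the rotations with an explicit angle loop.

```python
import math

SLOPE = 1 / math.sqrt(3)  # tan(30 degrees), as in the shader


def six_rotations(x, y):
    """All six rotations of (x, y) by multiples of 60 degrees, like hex()."""
    out = []
    for k in range(6):
        a = math.radians(60 * k)
        c, s = math.cos(a), math.sin(a)
        out.append((x * c - y * s, x * s + y * c))
    return out


def fold_to_sector(x, y):
    """Return the rotated copy of (x, y) lying within 30 degrees of the +x axis."""
    for hx, hy in six_rotations(x, y):
        if abs(hy) < SLOPE * hx:
            return hx, hy
    return None  # exactly on a sector boundary, or the origin


a = math.radians(100)
print(fold_to_sector(math.cos(a), math.sin(a)))  # the point at 100 degrees folds to -20 degrees
```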

Note that p1, the point that is used to control the curvature of each segment, lies on the line through the previous control point and the end point of the previous segment. This ensures that each segment joins the previous one smoothly.
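Why this gives a smooth join can be checked with quadratic Bezier tangents. The tangent at the end of a segment is 2(end - p1). The tangent at the start of the next one is 2(p1_new - end). The shader sets p1_new = end + (end - p1) * s with s = 1 + noise("perlin", end) * Kink, so both tangents point in the same direction as long as s stays positive. Because "perlin" noise is signed, s could become zero or negative for Kink values of 1 or more, which would produce a cusp. The Python helpers below are illustrative.

```python
def next_control_point(p1, end, s):
    """Control point of the next segment: p1 reflected through end, scaled by s."""
    return tuple(end[i] + (end[i] - p1[i]) * s for i in range(2))


def quad_tangent(a, b, c, t):
    """Derivative of the quadratic Bezier a-b-c at t."""
    return tuple(2 * (1 - t) * (b[i] - a[i]) + 2 * t * (c[i] - b[i]) for i in range(2))


start, p1, end = (0.0, 0.0), (1.0, 1.0), (2.0, 1.5)
q1 = next_control_point(p1, end, 1.5)
print(quad_tangent(start, p1, end, 1.0), quad_tangent(end, q1, (4.0, 1.0), 0.0))
```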

#include "equations.h"

#define DOT(a,b) (a[0]*b[0]+a[1]*b[1])

#define SUB(a,b) vector(a[0]-b[0],a[1]-b[1],0)

// determine if point M is inside a rectangle with a margin

int in_rectangle(point M, point a, point b,

vector u, float W, vector v, float linewidth){

point A=a+linewidth*(-u-v);

point B=b+linewidth*(u-v);

point D=B+(W+2*linewidth)*v;

vector AM=SUB(M,A);

vector AD=SUB(D,A);

vector AB=SUB(B,A);

float dotamad=DOT(AM, AD);

float dotadad=DOT(AD, AD);

float dotamab=DOT(AM, AB);

float dotabab=DOT(AB, AB);

return (dotamad > 0 && dotamad < dotadad) &&

(dotamab > 0 && dotamab < dotabab);

}

#define CELL noise("cell", cp, seed++)

#define CELL2 vector(CELL, CELL, 0)

shader wiggles(

point Pos=P,

float Scale=1,

int Number=1,

float Length=0.5,

float LengthVar=0,

float Kink=0,

float Curl=0.2,

float Wave=30, // degrees

int Steps=2,

float StepsVar=0,

float Width=0.02,

float WidthVar=0,

int Seed=0,

output float Fac=0

){

point p = Pos * Scale;

p[2]=0;

point ip= point(floor(p[0]),floor(p[1]),0);

int nn=1+(int)ceil(Steps*Length);

for(int xx=-nn; xx <= nn; xx++){

for(int yy=-nn; yy <= nn; yy++){

int seed=Seed;

point cp = ip + vector(xx, yy, 0);

for(int wiggle=0; wiggle < Number; wiggle++){

vector start = cp + CELL2;

start[2]=0;

vector dir = CELL2 - 0.5;

dir[2]=0;

dir = normalize(dir);

vector perp = vector(dir[1],-dir[0],0);

float k=0.5 + Kink * (CELL-0.5);

float c=Curl*(CELL-0.5);

point p1=start+k*dir+c*perp;

for(int step=0; step < Steps; step++){

vector ldir = dir;

ldir *= Length + LengthVar*CELL;

point end=start+ldir;

if(in_rectangle(p, start, end, dir, c/2, perp, Width+WidthVar)){

float d,t;

if(splinedist(start, p1, end, p, d, t)){

float localwidth = Width+WidthVar*noise("uperlin",start,t);

if(d < localwidth){

Fac = (localwidth - d)/localwidth;

return;

}

}

}

if(CELL < StepsVar){

break;

}else{

p1 = end + (end - p1)*(1+noise("perlin",end)*Kink);

start = end;

dir = rotate(dir, radians(Wave*noise("perlin", start)), vector(0,0,0), vector(0,0,1));

}

}

}

}

}

}

The only other issue that needs attention is the generation of random numbers. In each cell we need a number of them and they need to be unique. We therefore add an extra seed argument to the call to noise. However, we must take care that all those numbers are generated in a repeatable way so we reset this seed for each cell to the value provided by the Seed input. This allows us to generate unique patterns for different objects sharing the same material.
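The seeding scheme follows the `CELL` macro: for every cell a counter starts at Seed and is incremented after each draw. The same cell therefore always produces the same sequence, and a different Seed produces a different one. The following Python sketch demonstrates this. `hash01()` is a hypothetical integer hash standing in for `noise("cell", cp, seed)`, so the actual values differ from Blender's.

```python
def hash01(cx, cy, n):
    """Hypothetical stand-in for noise("cell", cp, n): deterministic value in [0, 1)."""
    h = (cx * 374761393 + cy * 668265263 + n * 2246822519) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 3266489917) & 0xFFFFFFFF
    return (h ^ (h >> 16)) / 2 ** 32


class CellRandom:
    """Repeatable stream of random numbers for one cell."""

    def __init__(self, cx, cy, seed):
        self.cx, self.cy, self.seed = cx, cy, seed  # seed is reset for every cell

    def next_value(self):
        value = hash01(self.cx, self.cy, self.seed)
        self.seed += 1  # mirrors seed++ in the CELL macro
        return value


print([CellRandom(3, 4, 42).next_value() for _ in range(2)])  # same value twice
```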

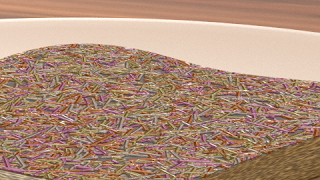

The Fac output isn't simply 1 or 0 but contains the distance to the edge of the fiber. We use that to drive a Bump node, through a square root Math node (power 0.5) to give it a smoothly curved appearance. We use the Object Info node to generate a random number because the heap of noodles consists of three separate squashed half spheres. The shader expects an integer, so we multiply the random value by a million to get a unique integer. I also tried optimizing splinedist() by hand. This did indeed shave off some small percentage, but the biggest win was the conversion of all calculations to two dimensions. Another big win was the test that checks whether we are within the bounds of the control rectangle before actually checking the distance to the spline (the in_rectangle() call in the code). equations.h so the shader can be used as is, without external dependencies.
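The cheap bounds test works by projecting the point onto two perpendicular sides of the rectangle. The point is inside when both projections fall between 0 and the squared side length, which needs only a few multiplications and no square roots. A minimal Python sketch follows. It assumes the sides a-b and a-d are perpendicular and leaves out the line-width margin that the shader adds.

```python
def in_rectangle(m, a, b, d):
    """True if 2D point m lies inside the rectangle with corner a and adjacent corners b, d."""
    am = (m[0] - a[0], m[1] - a[1])
    ab = (b[0] - a[0], b[1] - a[1])
    ad = (d[0] - a[0], d[1] - a[1])

    def dot(u, v):
        return u[0] * v[0] + u[1] * v[1]

    return 0 < dot(am, ab) < dot(ab, ab) and 0 < dot(am, ad) < dot(ad, ad)


print(in_rectangle((0, 1), (0, 0), (1, 1), (-1, 1)))  # works for rotated rectangles too
```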

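The distance from the shaded point to each line segment is calculated with the built-in three-argument distance() function. The cellnoise() function is deprecated, and we should use noise("cell", ...) instead. stdosl.h is automatically included by Blender already, so there is no need for us to include it again.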

shader sprinkles(

point Pos = P,

float Scale = 1,

int Np = 1,

int Seed = 42,

float Radius = 0.05,

float Size = 1,

output float Fac = 0,

output float Random = 0

){

point p = Pos * Scale;

point f = floor(p);

int xx,yy,np;

vector one = 1;

for( xx=-1; xx<=1; xx++){

for( yy=-1; yy<=1; yy++){

point ff = f + vector(xx,yy,0);

float u=Seed;

for( np=0; np < Np; np++){

vector pd1 = 2*noise("cell",ff,u)-one;

vector pd2 = 2*noise("cell",ff,u+1)-one;

point p1 = ff + pd1;

point p2 = ff + pd2;

p2 = (p2 - p1)*Size+p1;

// reduce to 2D

p1[2]=0;

p2[2]=0;

p [2]=0;

float r = distance(p1,p2,p);

if ( r < Radius ) {

Fac = 1 - r/Radius;

Random = noise("cell",ff,u+2);

}

u+=3;

}

}

}

}

The node setup used to make the image at the top looks like this:

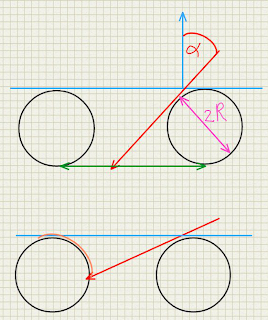

If α is so large that cos(α) < 2r, there will be no transmission at all.

shader sheet_occlusion2 (

normal Normal=N,

float Radius=0.05,

output float Fac=1,

output float Var=1

){

// calculate angle of incidence

float alpha = acos(dot(I,Normal));

// treat front and back the same

alpha = alpha > M_PI_2 ? M_PI - alpha : alpha;

// calculate the non occluded fraction

Fac = cos(alpha) - 2 * Radius;

// calculate the range of the visible normals

if( Fac < 0 ){

Fac = 0;

Var = cos(alpha) / (2 * Radius);

}

}
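The two outputs can be tabulated with a direct Python transcription of the shader logic. This is a sketch in which the cosine of the incidence angle is passed in directly, and the clamp before acos() is an addition. After folding front and back faces, cos(alpha) is simply the absolute value of dot(I, Normal) for unit vectors. The acos()/cos() pair could therefore be replaced by abs() in the shader as well.

```python
import math


def sheet_occlusion(cos_incidence, radius):
    """Return (Fac, Var) following the sheet_occlusion2 shader."""
    alpha = math.acos(max(-1.0, min(1.0, cos_incidence)))
    if alpha > math.pi / 2:
        alpha = math.pi - alpha  # treat front and back the same
    fac = math.cos(alpha) - 2 * radius  # non-occluded fraction
    var = 1.0
    if fac < 0:
        fac = 0.0
        var = math.cos(alpha) / (2 * radius)  # range of visible normals
    return fac, var


print(sheet_occlusion(1.0, 0.05), sheet_occlusion(0.05, 0.05))
```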

And the sample node setup mixes a transparent shader with a non-transparent shader just like before, but uses the Var output to modify the shininess of the non-transparent shader: