The idea is simple enough: create a map that stores, for each position on the inside of the mesh, a value representing how much light that point would receive from nearby surfaces. That sounds a lot like ambient occlusion, so the plan is to use ambient occlusion baking with the normals inverted. The only snag is that inside a mesh a ray is extremely unlikely to ever reach the sky (it is impossible if the mesh is watertight), which would result in a black map. However, Blender's AO settings have a distance parameter that tells the AO baking to treat any ray that does not hit a surface within this distance as sky.

(Note that we do not even have to enable AO for our purpose, i.e. baking; just setting the distance to 0.1 or so will suffice.)
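To get a feel for what the distance parameter does, here is a small toy model in plain Python. It is only a sketch under loud assumptions and not Blender's actual sampler: the mesh is taken to be locally a flat slab of uniform thickness, the rays are cosine-weighted over the inward hemisphere, and the names `baked_ao_value` and `translucency` are made up for this illustration.

```python
import math


def baked_ao_value(thickness, distance, samples=10000):
    """Toy estimate of the AO value baked at a point on a flat slab.

    Assumptions (not taken from Blender's source): rays start on one face,
    point into the slab, and are cosine-weighted over the hemisphere.
    A ray counts as "sky" when it travels farther than `distance`
    before hitting the opposite face.
    """
    if thickness <= 0 or distance <= 0:
        raise ValueError("thickness and distance must be positive")
    escaped = 0
    for i in range(samples):
        # Stratified samples; cos(theta) = sqrt(1 - u) gives a
        # cosine-weighted distribution of ray directions.
        u = (i + 0.5) / samples
        cos_theta = math.sqrt(1.0 - u)
        # Path length to the opposite face of the slab along this ray.
        path_length = thickness / cos_theta
        if path_length > distance:
            escaped += 1
    # Fraction of rays that reached "sky": 0 is black, 1 is white.
    return escaped / samples


def translucency(thickness, distance):
    """Inverted AO value: thin parts come out bright, thick parts dark."""
    return 1.0 - baked_ao_value(thickness, distance)


if __name__ == "__main__":
    for t in (0.02, 0.05, 0.08, 0.2):
        print(t, translucency(t, distance=0.1))
```

In this model any part thicker than the AO distance bakes to pure white (all rays count as sky), so after inversion it gets zero translucency, while thinner parts get progressively brighter. That is the reason the distance should be chosen on the scale of the thin features you care about.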

Now we can create a map that approximates translucency with the following steps:

- Invert the normals on the mesh (they should all point inward)

- Bake the ambient occlusion to an image texture (documented here)

- Make sure you point the normals to the outside again

- Use the inverted values of the image as a translucency map.
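The last step, inverting the baked values, can be sketched in a few lines of Python. The helper name `invert_ao_pixels` is hypothetical, and the sketch assumes the baked image is available as a flat sequence of RGBA floats in the 0 to 1 range (the layout Blender exposes through an image's `pixels` attribute). Inside a node setup an Invert node would do the same job without any scripting.

```python
def invert_ao_pixels(pixels):
    """Return a new flat RGBA list with the colour channels inverted.

    `pixels` is assumed to be a flat sequence of floats in the order
    R, G, B, A, R, G, B, A, ... with values between 0 and 1.
    Alpha is passed through unchanged.
    """
    if len(pixels) % 4 != 0:
        raise ValueError("expected a flat RGBA sequence")
    inverted = []
    for i in range(0, len(pixels), 4):
        r, g, b, a = pixels[i:i + 4]
        # Dark AO (many nearby hits, i.e. a thin part) becomes bright.
        inverted.extend((1.0 - r, 1.0 - g, 1.0 - b, a))
    return inverted


if __name__ == "__main__":
    # Two pixels: one fairly dark (thin part) and one white (thick part).
    baked = [0.25, 0.25, 0.25, 1.0,
             1.0, 1.0, 1.0, 1.0]
    print(invert_ao_pixels(baked))
```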

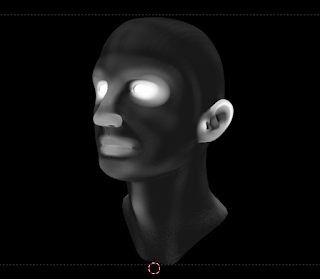

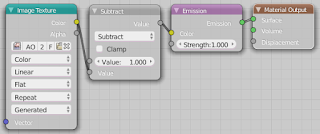

The noodle that uses this map, with an emission shader to visualize its values, is shown below.

Whether this is really useful, for example to tweak a subsurface scattering shader, is up to you :-) If you create a skin shader with it, I am eager to see the results.
